GHz: Why Have They Stalled?

First of all, what is a hertz? The hertz, or cycle per second, is the unit of clock frequency: the number of times per second that the clock signal rises and falls. Each cycle makes the processor do work by changing the state of its transistors. The related units are as follows:

1 GHz = 1,000 MHz = 1,000,000 kHz = 1,000,000,000 Hz.
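As a quick illustration of these units, here is a minimal Python sketch that converts a frequency to hertz and computes how long one clock cycle lasts. The function names and the 2 GHz example value are only illustrative assumptions.

```python
# Unit factors for clock frequency.
HZ_PER_KHZ = 1_000
HZ_PER_MHZ = 1_000_000
HZ_PER_GHZ = 1_000_000_000


def ghz_to_hz(ghz):
    """Convert a frequency in GHz to Hz."""
    return ghz * HZ_PER_GHZ


def cycle_time_ns(ghz):
    """Duration of one clock cycle, in nanoseconds, at the given frequency."""
    return 1e9 / ghz_to_hz(ghz)


print(ghz_to_hz(1))        # 1000000000
print(cycle_time_ns(2.0))  # 0.5
```

At 2 GHz, each cycle lasts half a nanosecond.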

Before 2003, clock frequency kept increasing at a steady rate. People showed off the MHz of their processors because, among the processors available at the time, it was often true that more MHz meant a faster processor.

This was because smaller transistors could change state faster, which allowed the clock frequency to rise. But as the clock frequency rises, so do the dissipated heat and the power consumption, which makes it impossible to push the frequency beyond a certain limit.
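The link between frequency and consumption can be sketched with the usual first-order approximation for dynamic power in CMOS circuits, P ≈ a · C · V² · f (activity factor, switched capacitance, supply voltage, frequency). This formula is not in the original text, and the function name and the example values below are illustrative assumptions, not measurements.

```python
def dynamic_power(capacitance, voltage, frequency, activity=1.0):
    """First-order estimate of dynamic power: a * C * V^2 * f."""
    return activity * capacitance * voltage ** 2 * frequency


# Normalized baseline: every quantity set to 1.
base = dynamic_power(1.0, 1.0, 1.0)

# Hypothetical scenario: 50% higher frequency that also needs 20% more voltage.
faster = dynamic_power(1.0, 1.2, 1.5)

print(f"{faster / base:.2f}")  # 2.16
```

In this hypothetical scenario a 50% gain in frequency costs more than double the power, because running faster often requires a higher voltage and the voltage enters squared.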

The best way to know which processor is better has always been to measure the performance of the applications you are going to run on it. Being told the clock frequencies of two processors does not tell you which one is faster (unless they share the same architecture).

When you buy a processor, the best choice is usually the one with the most recent architecture. For instance, a Core i7 at 2 GHz has better throughput than a Quad Core at 3 GHz, because the former is more recent than the latter.

At the same time, and curiously, it stopped being viable to exploit more instruction-level parallelism. This optimization path ran out because of the rising cost of the additional hardware needed to find instructions that do not depend on other instructions, so that they can execute in parallel. That cost grows faster and faster as the number of parallel instructions increases.
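To give an idea of what "finding instructions that do not depend on other instructions" means, here is a simplified Python sketch. The three-instruction program, the register names and the helper functions are hypothetical, and real processors do this in hardware with techniques (such as register renaming) that this sketch ignores. It only shows the idea: every candidate instruction must be compared against the others, so the number of comparisons grows roughly with the square of the number of instructions examined at once.

```python
from itertools import combinations

# Each hypothetical instruction: (destination register, source registers).
program = [
    ("r1", ("r2", "r3")),  # r1 = r2 + r3
    ("r4", ("r1", "r5")),  # r4 = r1 + r5 (reads r1, so it depends on the first)
    ("r6", ("r7", "r8")),  # r6 = r7 + r8 (independent of both)
]


def independent(a, b):
    """True if there is no register hazard between a and b (a comes first)."""
    dest_a, src_a = a
    dest_b, src_b = b
    raw = dest_a in src_b   # b reads what a writes
    war = dest_b in src_a   # b overwrites what a reads
    waw = dest_a == dest_b  # both write the same register
    return not (raw or war or waw)


def dependency_checks(width):
    """Pairwise comparisons needed to check `width` instructions against each other."""
    return width * (width - 1) // 2


pairs = [
    (i, j)
    for (i, a), (j, b) in combinations(enumerate(program), 2)
    if independent(a, b)
]
print(pairs)                                      # [(0, 2), (1, 2)]
print([dependency_checks(w) for w in (2, 4, 8)])  # [1, 6, 28]
```

Doubling the number of instructions examined together roughly quadruples the comparisons, which is one way to picture why this cost kept rising.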

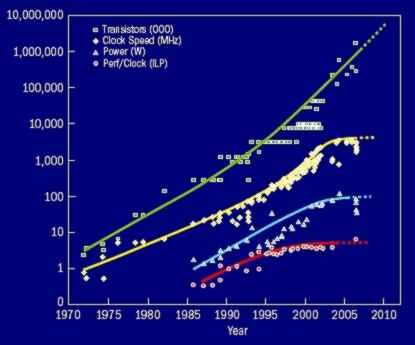

This graph, by D. Patterson of the University of California, shows the evolution of instruction-level parallelism (red line), power consumption (blue line), processor frequency (yellow line) and transistor count (green line).

With higher frequency and instruction-level parallelism both exhausted as optimization paths, engineers had to look for other ways to take advantage of the growing number of transistors. They began to add processor-level parallelism, 64-bit processors and large caches.

Processor-level parallelism is the approach that increases performance the most. Every 24 months a new processor appears with double the number of cores, making desktop computers more similar to supercomputers, which have hundreds of processors.

The problem with this growth in core count is that programming becomes more complex, so software has not been able to adapt fast enough.

Most programs do not parallelize their algorithms, and so they waste the resources of these new processors. At the moment, only some games and 3D graphic design programs can take advantage of them.
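One common way to put numbers on this waste is Amdahl's law, which estimates the speedup of a program on several cores from the fraction of its work that can run in parallel. The law is not mentioned in the original text, and the 90% figure below is just an assumed example, so take this as a rough sketch.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Estimated speedup on `cores` cores when only `parallel_fraction` of the work is parallel."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)


for cores in (2, 4, 8):
    serial_program = amdahl_speedup(0.0, cores)    # nothing parallelized
    parallel_program = amdahl_speedup(0.9, cores)  # assumed: 90% parallelized
    print(cores, round(serial_program, 2), round(parallel_program, 2))
```

A program that parallelizes nothing gets a speedup of 1 no matter how many cores it is given, and even with 90% of the work parallelized, 8 cores give less than a 5x speedup.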

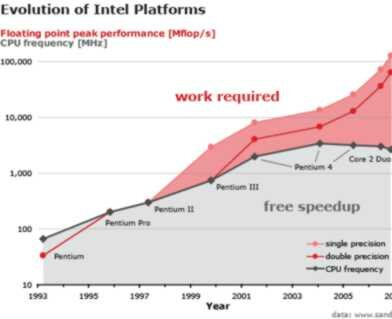

This graph shows the effort programmers must invest to take advantage of the power of the processor (red area), compared with the performance gained without any intervention from the programmer (grey area). It clearly shows that the arrival of new instructions and the shift, in 2004, from optimizing by raising the frequency to optimizing by adding processors have sharply increased the programming effort needed to obtain the best throughput.

At this rate, the only solution will be for compilers to learn to parallelize algorithms by themselves. Until then, we will keep buying more powerful machines that we cannot take full advantage of.